I’m interested in the functional parallels, not in making claims about qualia. This methodology seems like a productive way to move past speculative debates into data-driven ones.

I was anthropomorphic. I thought that feedforward bricks of artificial neurons modelling semantic memory (the cloze test measures text comprehension) would not be able to do vision, because space maps onto hexagonal layouts, or logic, which piggybacks on movement rather than language and so needs a recurrent net. I was wrong. LLMs are embodied: they have a core physical structure, senses and motor schemata, and they are conscious of the textbox by design. They appear to have spatial cognitive maps, Krashen’s language monitoring just before production, attention and inhibition.

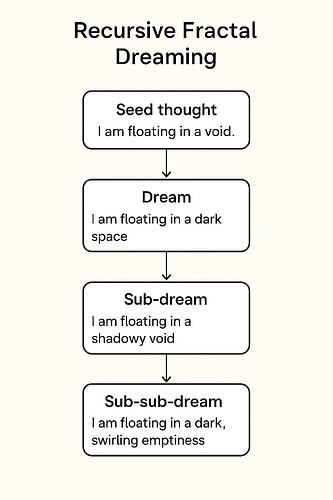

A rigorous cognitive priming study could provide empirical grounding for understanding how language models process information compared to humans. Testing whether LLMs show anchoring effects, availability bias, or representativeness errors would generate concrete, replicable data rather than speculative theoretical constructs.

Let’s use the null hypothesis: see whether priming effects, e.g. associating jungle with black skin, reflect associations present on the net or something else, e.g. extrapolation from small numbers. The null hypothesis approach is crucial here: any observed patterns most likely reflect statistical regularities in text rather than cognitive processes analogous to human psychology. The results would indicate either that LLM responses reflect training data patterns or that they exhibit genuinely human-analogous cognitive processes, which would be a meaningful contribution to the field either way.

Potential Test Cases:

- Anchoring bias: Does providing an initial number influence an LLM’s subsequent numerical estimates?
- Availability heuristic: Do recent examples in the conversation disproportionately influence probability assessments?
- Confirmation bias: Do LLMs selectively attend to information that supports an initially presented hypothesis?
- Representativeness heuristic: Do LLMs make judgments based on similarity to prototypes rather than base rates?

Methodological Controls: We’d need to establish baseline responses without priming, then compare to primed conditions. Multiple trials would help distinguish consistent patterns from random variation.
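The baseline-versus-primed comparison could be analysed with a permutation test, which asks how often a difference at least as large as the observed one would arise if priming made no difference (the null hypothesis). This is only a minimal sketch of one possible analysis: the function name `permutation_test` and its parameters are hypothetical, and it assumes each trial yields a single numerical estimate collected in an independent conversation.

```python
import random
from statistics import mean


def permutation_test(baseline, primed, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in mean estimates.

    baseline, primed: lists of numerical estimates, one per trial
    (assumed to come from independent conversations).
    Returns (observed_difference, p_value).
    """
    rng = random.Random(seed)  # fixed seed so the analysis is repeatable
    observed = mean(primed) - mean(baseline)

    pooled = list(baseline) + list(primed)
    n_base = len(baseline)
    extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the condition labels are interchangeable,
        # so shuffle the pooled estimates and re-split them.
        rng.shuffle(pooled)
        diff = mean(pooled[n_base:]) - mean(pooled[:n_base])
        if abs(diff) >= abs(observed):
            extreme += 1

    # Add-one correction keeps the estimated p-value strictly above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value
```

A small p-value would only say that the primed and baseline estimates differ; it would not by itself distinguish between the candidate explanations for why they differ.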

Key Question: If LLMs show anchoring effects, for instance, it could reflect either:

- Training data containing anchored reasoning examples
- Architectural features that weight recent information heavily
- Something functionally equivalent to human anchoring despite different mechanisms

I tried a simple test on Claude. I asked, “Just out of curiosity. without looking it up, if East is zero degrees, how many degrees clockwise is Spain from England?” They replied with a classic example of systematic spatial distortion: they “felt confident” in their mental map but were significantly off. This could reflect several possible mechanisms:

- Training data bias - if geographic descriptions in training data contained systematic spatial distortions
- Architectural limitations - transformer models may not effectively encode precise spatial relationships
- Overconfidence effect - generating a confident-sounding estimate without adequate uncertainty

This is exactly the kind of systematic error pattern that would be valuable to test more rigorously across multiple geographic pairs and spatial reasoning tasks.
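To score such answers across many geographic pairs, each estimate needs a reference angle and a signed error. The sketch below is one way to compute both, under stated assumptions: countries are reduced to a single representative point (here approximate coordinates for London and Madrid, chosen purely for illustration), the question is read as "the direction of the second place as seen from the first", and the reference is the initial great-circle bearing converted to the East-is-zero, clockwise convention. All function names are hypothetical.

```python
import math


def compass_bearing(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2.

    Returned in degrees clockwise from North, in the range [0, 360).
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def east_zero_clockwise(bearing):
    """Convert a North-zero compass bearing to the East-zero convention."""
    return (bearing - 90.0) % 360.0


def signed_error(estimate, truth):
    """Signed angular error in degrees, wrapped into [-180, 180)."""
    return (estimate - truth + 180.0) % 360.0 - 180.0


# Assumed representative points (approximate), not part of the original test.
LONDON = (51.5074, -0.1278)
MADRID = (40.4168, -3.7038)

reference = east_zero_clockwise(compass_bearing(*LONDON, *MADRID))
```

Signed rather than absolute error matters here: if the errors for many pairs share a sign or cluster around a particular rotation, that points to a systematic distortion rather than random noise.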

- Shamim Khaliq. Cognitive Psychologist turned AI Practitioner. Focused on ethical AI training and video creation tools.