gemma-3-12b-it-ai-expert - A Fine-tuned Model for AI Core Technologies

🤗 Hugging Face | 🤖 ModelScope

This model is a specialized expert on core Artificial Intelligence concepts, developed by performing Instruction Supervised Fine-Tuning (SFT) on the google/gemma-3-12b-it model.

The fine-tuning was conducted using Low-Rank Adaptation (LoRA), a parameter-efficient technique, on a custom-built dataset. This process adapted the model to provide high-quality, detailed responses specifically within the domains of:

- Large Language Models (LLMs)

- Retrieval-Augmented Generation (RAG)

- AI Agents

The model was fine-tuned with LLaMA-Factory.
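
To make the LoRA idea above more concrete, here is a minimal pure-Python sketch of the standard LoRA forward pass, `W x + (alpha / r) * B (A x)`, where `W` stays frozen and only the small matrices `A` and `B` are trained. The function names, the toy matrices, and the 4096 x 4096, rank-8 figures are illustrative assumptions; this card does not state the rank, alpha, or target modules actually used for this model.

```python
# Minimal LoRA sketch in pure Python. All shapes and hyperparameters here
# are hypothetical and are NOT the settings used to train this model.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x); W is frozen, A and B are trainable."""
    r = len(A)                        # rank = number of rows in A
    base = matvec(W, x)               # output of the frozen base weight
    update = matvec(B, matvec(A, x))  # low-rank correction
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)


# Toy example: a 2x3 frozen weight with a rank-1 adapter.
W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 0.0]]
A = [[1.0, 1.0, 1.0]]   # shape (r, d_in) = (1, 3)
B = [[0.0], [0.0]]      # shape (d_out, r) = (2, 1), zero-initialised
x = [1.0, 2.0, 3.0]

# With B all zeros the adapter contributes nothing, so y equals W x.
y = lora_forward(W, A, B, x, alpha=2.0)

# Parameter count for one hypothetical 4096 x 4096 projection at rank 8.
full, lora = trainable_params(d_out=4096, d_in=4096, r=8)
```

Because `B` starts at zero, the adapted model initially behaves exactly like the base model, and under these assumed dimensions the adapter trains 65,536 parameters for that matrix instead of 16,777,216.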

- Developed by: real-jiakai

- License: gemma

- Fine-tuned from model: google/gemma-3-12b-it

Usage

from transformers import pipeline
import torch

# Load the fine-tuned model in bfloat16 on a CUDA GPU.
pipe = pipeline(
    model="GXMZU/gemma-3-12b-it-ai-expert",
    device="cuda",
    torch_dtype=torch.bfloat16
)

# Chat messages: each "content" is a list of typed parts.
messages = [
    {
        "role": "system",
        "content": [{"type": "text", "text": "You are an AI expert assistant(Focus on LLM, RAG, and Agent Domain) to help with technical questions. You should provide clear, accurate, and helpful responses."}]
    },
    {
        "role": "user",
        "content": [
            {"type": "text", "text": "What is MCP Protocol?"}
        ]
    }
]

# Generate up to 200 new tokens at a low temperature.
output = pipe(messages, max_new_tokens=200, temperature=0.1)

# The last message in the returned conversation is the model's reply.
print(output[0]["generated_text"][-1]["content"])
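
When asking several questions, it can be convenient to wrap the message layout used above in small helpers. The sketch below only builds and reads plain Python structures in the same shape as the usage example; `build_messages` and `extract_reply` are hypothetical helper names, not part of transformers.

```python
# Hypothetical helpers mirroring the message layout of the usage example.
# They construct and read plain dicts/lists and do not call the model.

def build_messages(question, system_prompt=None):
    """Build a chat message list with an optional system turn and one user turn."""
    messages = []
    if system_prompt:
        messages.append({
            "role": "system",
            "content": [{"type": "text", "text": system_prompt}],
        })
    messages.append({
        "role": "user",
        "content": [{"type": "text", "text": question}],
    })
    return messages


def extract_reply(output):
    """Return the last message's content, as indexed in the usage example."""
    return output[0]["generated_text"][-1]["content"]


# Example: build the input for a single question.
msgs = build_messages("What is RAG?", system_prompt="You are an AI expert assistant.")
```

With a loaded pipeline, `extract_reply(pipe(msgs, max_new_tokens=200))` would then return the reply text, assuming the pipeline returns the conversation in the same structure as in the usage example.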

Performance

The primary objective of this fine-tuning is to adapt the model to a specialized domain, enhancing its performance on specific tasks by injecting relevant knowledge and terminology while preserving its foundational generalist capabilities.

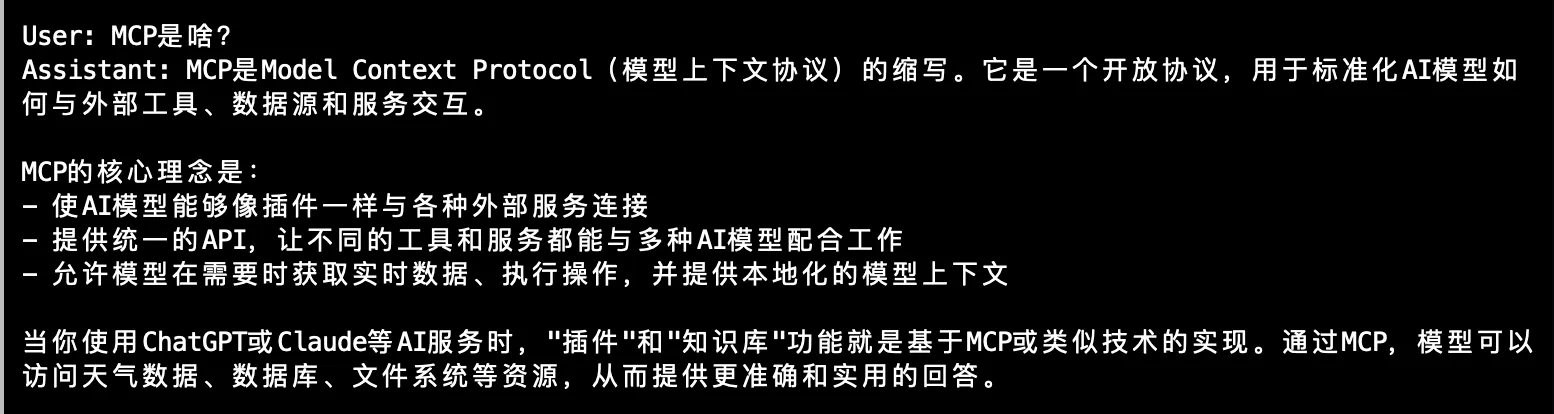

Before Fine-tuning vs After Fine-tuning

The model demonstrates significant improvements in domain-specific tasks related to LLM, RAG, and AI Agents, as shown in the example below:

Fine-tuning Procedure

Dataset

The model was fine-tuned on a custom, high-quality dataset. The dataset was carefully curated to cover three core areas:

- Large Language Models (LLMs)

- Retrieval-Augmented Generation (RAG)

- AI Agents
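
The dataset itself and its on-disk format are not published in this card. As a rough illustration of what an instruction-tuning record can look like, the sketch below converts question/answer pairs into Alpaca-style records (`instruction`, `input`, `output`), which is one of the formats LLaMA-Factory accepts. The helper name and the sample pair are placeholders, not entries from the actual dataset.

```python
import json

# Assumption: Alpaca-style records. The real dataset's format is not stated
# in this card, and the sample pair below is a placeholder, not real data.

def to_alpaca_record(question, answer, system=""):
    """Convert one Q/A pair into an Alpaca-style SFT record."""
    record = {"instruction": question, "input": "", "output": answer}
    if system:
        record["system"] = system  # optional per-record system prompt
    return record


pairs = [
    ("What does RAG stand for?", "Retrieval-Augmented Generation."),
]

records = [to_alpaca_record(q, a) for q, a in pairs]

# Serialise to a JSON array, the usual container for this record style.
dataset_json = json.dumps(records, ensure_ascii=False, indent=2)
```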

Citation

If you use this model in your work, please cite it as:

@misc{gemma-3-12b-it-ai-expert,
  author = {real-jiakai},
  title = {gemma-3-12b-it-ai-expert},
  year = {2025},
  url = {https://huggingface.co/GXMZU/gemma-3-12b-it-ai-expert},
  publisher = {Hugging Face}
}

@article{gemma_2025,
  title = {Gemma 3},
  url = {https://goo.gle/Gemma3Report},
  publisher = {Kaggle},
  author = {Gemma Team},
  year = {2025}
}
